One month ago, Apple released the Vision Pro, and with it, the ability to record and play back spatial (and immersive) video. The Apple TV app includes a set of beautifully produced videos giving viewers the chance to sit in the studio with Alicia Keys, visit the world’s largest rhino sanctuary, and perhaps most stunningly, follow Faith Dickey as she traverses a “highline” 3,000 feet above Norway’s fjords. These are videos that envelop the viewer with ~180 degrees of wraparound content and provide a very strong sense of presence.

It isn’t too hyperbolic to say that immersive video — when done right — makes you feel like you’ve been teleported to a new location. While you might “have seen” a place in a traditional flat video, with immersive video, you’ve “been there.” If you haven’t experienced video like this, I’m jealous, because there’s nothing like the first time. If you own a Vision Pro and haven’t watched these yet, stop reading and do it now!

What readers of my admittedly sparse blog might not know is that I worked for an immersive video startup in Seattle (called Pixvana) for four full years starting in early 2016. I helped build our cross-platform video playback SDK, the on-device video players themselves, the in-headset authoring, editing, and scripting tools, a viewport-adaptive streaming technology, some novel frame mapping techniques, and the transformation, encoding, and streaming pipeline that we ran in the cloud. It was an exciting and challenging time, and looking back, I’m surprised that I never posted about it. I loved it!

The launch of the Apple Vision Pro has reignited interest in these formats, introducing them to an audience that was previously unaware of their existence. And as a huge fan of immersive video myself, I had to dig in.

Context

For some useful context, Apple acquired one of the very few startups in the space, NextVR, back in 2020. While we (at Pixvana) were focused on pre-recorded, on-demand content and tooling, NextVR was focused on the capture and streaming of live events, including sports and music. I was a fan and user of their technology, and we were all in a situation where “a rising tide lifts all boats.” It looks like Apple is planning to continue NextVR’s trajectory with events like the MLS Cup Playoffs.

I could probably write a book’s worth of content on lessons learned regarding the capture, processing, encoding, streaming, and playback of spatial media (both video and audio). While it all starts with the history and experience of working with traditional, flat media, it quickly explodes into huge frame dimensions, even larger master files, crashing tools, and the challenge of mapping spherical immersive content to a rectangular video frame.

Then, because viewers are experiencing content with two huge magnifying lenses in front of their eyes, they inadvertently become pixel-peepers, and compression-related artifacts that may have been acceptable with flat media become glaring, reality-busting anomalies like glitches in the matrix. Oh, did I forget to mention that there are now two frames that have to be delivered, one to each eye? Or the fact that 60 or even 90 frames per second is ideal? It’s a physics nightmare, and the fact that we can stream this reality over limited bandwidth to a headset in our home is truly amazing. But I digress.

Terminology

First, let’s get some terminology out of the way. While I haven’t seen any official Apple definitions, based on what I’ve read, when Apple talks about spatial video, they’re referring to rectangular, traditional 3D video. Yes, there is the opportunity to store additional depth data along with the video (very useful for subtitle placement), but for the most part, spatial videos are videos that contain both left- and right-eye views. The iPhone 15 Pro and the Vision Pro can record these videos, each utilizing its respective camera configuration.

When Apple talks about immersive video, they appear to be referring to non-rectangular video that surrounds the viewer; this is actually a very good term for the experience. Videos like this are typically captured by two (or more) sensors, often with wide-angle/fisheye lenses. The spherical space they capture is typically mapped to a rectangular video frame using what is known as an equirectangular projection (think about a map of the Earth…also a spherical object distorted to fit into a rectangle). Apple’s own Immersive videos are mapped using a fisheye projection to cover an approximately 180-degree field of view.
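To make the map analogy concrete, here’s a minimal Python sketch of the equirectangular mapping. Longitude (yaw) maps linearly to x, and latitude (pitch) maps linearly to y. The function name and angle conventions are my own assumptions for illustration: yaw 0 is at the frame center and pitch +90 is at the top. The optional 180-degree span models a half-equirectangular frame as covering only yaw in [-90, 90], which is also an assumption. This is not an Apple API, and it doesn’t cover Apple’s fisheye mapping.

```python
def equirect_pixel(yaw_deg: float, pitch_deg: float, width: int, height: int,
                   h_span_deg: float = 360.0) -> tuple[float, float]:
    """Map a viewing direction to continuous (x, y) coordinates in an
    equirectangular frame.

    yaw_deg:    horizontal angle; 0 is the center of the frame, positive is right
    pitch_deg:  vertical angle; 0 is the horizon, +90 is straight up
    h_span_deg: horizontal coverage of the frame (360 for full equirectangular,
                180 for the half-equirectangular case; an assumption here)
    """
    half_span = h_span_deg / 2.0
    if not -half_span <= yaw_deg <= half_span:
        raise ValueError("yaw is outside the frame's horizontal coverage")
    if not -90.0 <= pitch_deg <= 90.0:
        raise ValueError("pitch must be within [-90, 90]")
    # Longitude maps linearly to x; latitude maps linearly to y (top row = +90).
    x = (yaw_deg + half_span) / h_span_deg * width
    y = (90.0 - pitch_deg) / 180.0 * height
    return x, y


# Straight ahead lands in the middle of a 2:1 frame.
print(equirect_pixel(0.0, 0.0, 3840, 1920))  # (1920.0, 960.0)
```

Because the mapping is linear in angle, every row of the frame has the same number of pixels. Rows near the poles are therefore heavily oversampled compared with the equator, which is one reason these frames get so large.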

Resolution

The other bit of terminology that matters, especially when talking about stereo video, is video resolution. For traditional 2D flat formats, it is common to refer to something as “4K video.” And to someone familiar with the term, 4K generally means a resolution of 3840×2160 at a 16:9 aspect ratio. 8K generally means 7680×4320 at a 16:9 aspect ratio. Notice that the “#K” number comes from the horizontal resolution (-ish) only.

When talking about stereo video, the method that is used to store both the left- and right-eye views matters. In a traditional side-by-side or over/under format, both the left- and right-eye images are packed into a single video frame. So, if I refer to a 4K side-by-side stereo video, am I referring to the total width of the frame, meaning that each eye is really “2K” each? Or am I saying that each eye is 4K wide, meaning the actual frame is 8K wide? Unless someone is specific, it’s easy to jump to the wrong conclusion.
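A tiny helper removes the ambiguity by always converting a packed frame size to a per-eye size. The layout labels ("sbs" and "ou") are hypothetical names for this sketch. It also assumes that each eye occupies exactly half of the frame.

```python
from typing import NamedTuple


class Size(NamedTuple):
    width: int
    height: int


def per_eye_size(frame: Size, layout: str) -> Size:
    """Per-eye resolution of a frame-packed stereo video.

    layout: "sbs" (side-by-side: the eyes split the width) or
            "ou" (over/under: the eyes split the height).
    """
    if layout == "sbs":
        if frame.width % 2:
            raise ValueError("side-by-side frame width must be even")
        return Size(frame.width // 2, frame.height)
    if layout == "ou":
        if frame.height % 2:
            raise ValueError("over/under frame height must be even")
        return Size(frame.width, frame.height // 2)
    raise ValueError(f"unknown layout: {layout!r}")


# A "4K" side-by-side frame only carries 1920x2160 per eye...
print(per_eye_size(Size(3840, 2160), "sbs"))  # Size(width=1920, height=2160)
# ...while 3840-wide eyes side-by-side need a 7680-wide frame.
print(per_eye_size(Size(7680, 2160), "sbs"))  # Size(width=3840, height=2160)
```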

To add to this, Apple Immersive Video is described as “180-degree 8K recordings.” Because the MV-HEVC format doesn’t store left- and right-eye views side-by-side in a single image, the frame size reported by video tools is actually the resolution for each eye. So, does Apple mean that each eye is 8K wide?

Well, if you monitor your network traffic while playing Apple Immersive videos, you can easily determine that the highest quality version of each video has a resolution of 4320×4320 per eye at a 1:1 aspect ratio. Wait a minute! That sounds like 4K per eye at first glance. But then, notice that instead of a wide 16:9 frame, the video is presented as a square, 1:1 frame. And if you squint just right, 4K width + 4K height might lead to something like an 8K term. Or maybe Apple means that if you did place them side-by-side, you’d end up with something like an 8K-ish video?
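The arithmetic behind that “8K-ish” reading is easier to see when it’s multiplied out. This sketch only multiplies dimensions, and the variable names are mine. Both eyes together hold 12.5% more pixels than a single 7680×4320 frame, and placed side-by-side they would form an 8640×4320 frame.

```python
def pixels(width: int, height: int, views: int = 1) -> int:
    """Total pixels per frame across all views."""
    return width * height * views


apple_immersive = pixels(4320, 4320, views=2)  # two 4320x4320 eyes (MV-HEVC)
uhd_8k = pixels(7680, 4320)                    # one 16:9 "8K" frame
sbs_equivalent = pixels(4320 * 2, 4320)        # both eyes packed side-by-side

print(f"{apple_immersive:,} {uhd_8k:,} {sbs_equivalent:,}")
# 37,324,800 33,177,600 37,324,800
print(f"ratio vs 8K: {apple_immersive / uhd_8k:.3f}")  # ratio vs 8K: 1.125
```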

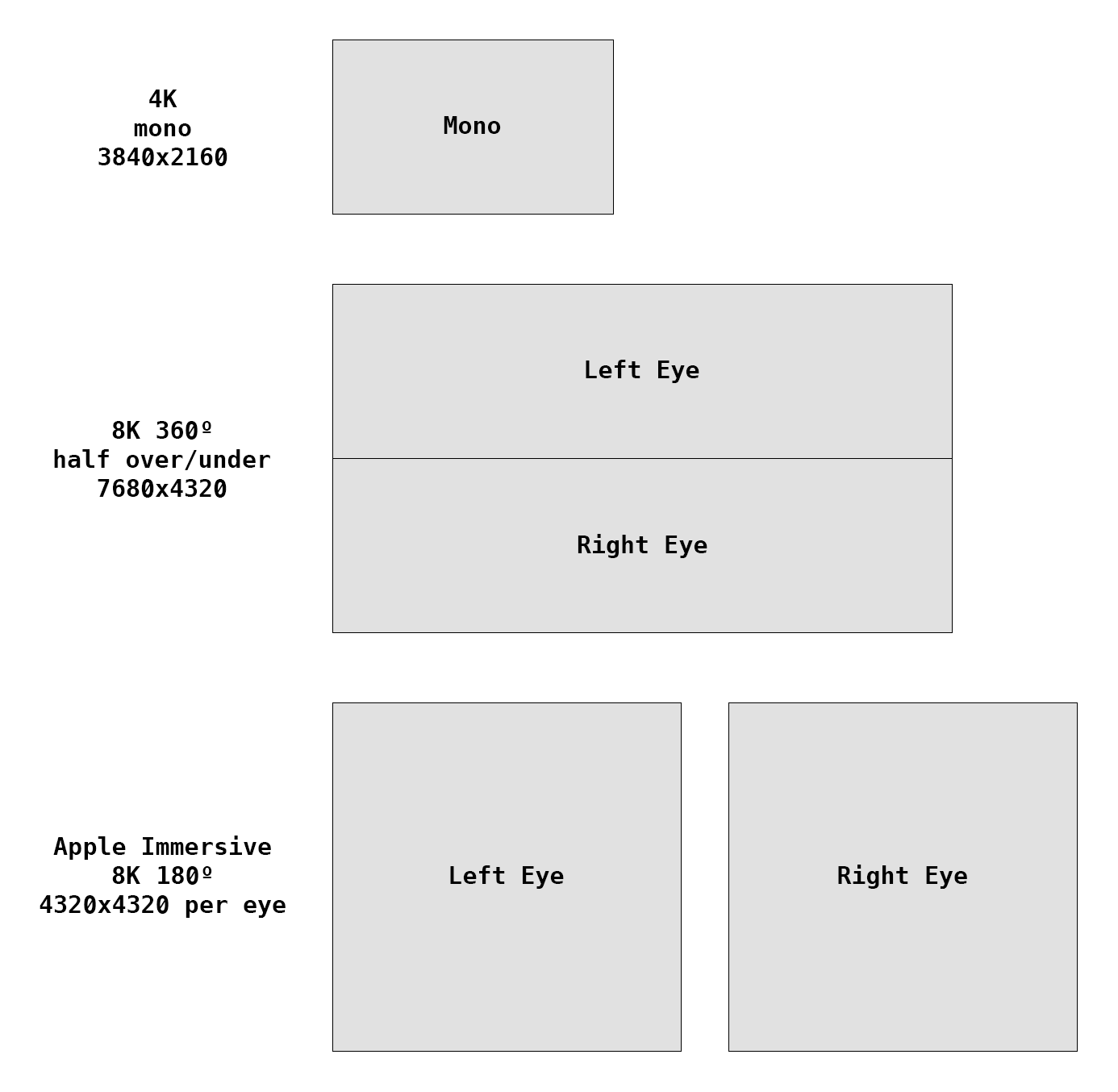

Some various frame sizes and layouts for comparison (to scale):

The confusion around these shorthand terms is why I like to refer to the per-eye resolution of stereo video, and I like to be explicit about both the width and height when it matters.

Before I leave the topic, I’ll also note that Apple’s Immersive Video is presented at 90 frames per second in HDR10 at a top bitrate of around 50Mbps. The frames are stored in a fisheye projection format, and while I have some ideas about that format, until Apple releases more information, it’s only speculation.
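One way to appreciate how hard the encoder is working is to divide the bitrate by the number of pixels shown each second. This is a back-of-the-envelope sketch. It treats the ~50Mbps top bitrate as an exact average, and it counts both eyes in full even though MV-HEVC can predict one view from the other.

```python
def bits_per_pixel(bitrate_bps: float, width: int, height: int,
                   fps: float, views: int = 1) -> float:
    """Average compressed bits spent per displayed pixel."""
    return bitrate_bps / (width * height * views * fps)


bpp = bits_per_pixel(50e6, 4320, 4320, fps=90, views=2)
print(f"{bpp:.4f} bits per pixel")  # 0.0149 bits per pixel
```

At roughly 0.015 bits per pixel, it’s easy to see why compression artifacts matter so much when every viewer becomes a pixel-peeper.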

MV-HEVC

Apple has decided to use the multiview extensions of the HEVC codec, known as MV-HEVC. This format encodes multiple views (one for each eye) using the well-known and efficient HEVC codec. For many reasons beyond the scope of this article, I think this is a fantastic choice. Earlier versions of the multiview technique have been used to encode 3D Blu-ray discs. The biggest challenge with MV-HEVC is that — even though the format was defined years ago — there is almost no tooling that works with it (and yes, that includes the ubiquitous ffmpeg).

Fortunately, Apple has provided support for MV-HEVC encoding and decoding in AVFoundation. It seems very probable that tools like ffmpeg, Adobe Premiere, DaVinci Resolve, Final Cut Pro and others will add support eventually, but as of this writing, Apple provides the only practical solution. For completeness, I am aware of other projects, and I hope that they’re announced at some point in the future.

Necessity

When my Apple Vision Pro arrived, I wanted to see how our older Pixvana footage looked in this new higher-fidelity headset. So, I hacked together a quick tool to take a flat, side-by-side or top/bottom video and encode it using MV-HEVC. The lack of detailed documentation made this more difficult than expected, but I was able to get it working.

When I tried to play a properly-encoded immersive (equirectangular) video in the Apple Vision Pro Files and Photos apps, they played back as standard, rectilinear spatial video. Yes, they had 3D depth, but they were displayed on a virtual movie screen, not wrapped around me like an immersive video should be. After chatting with others in the immersive video community, we’ve all concluded that Apple currently provides no built-in method to play back user-created immersive video. Surprising, to be honest.

To work around this limitation, I built a bare-bones MV-HEVC video player to watch my videos. When I could finally see them in the Vision Pro, they looked fantastic! That led to a bunch of experimentation, with others in the community sending me increasingly large video files at even higher frame rates. While we haven’t identified the exact limitations of the hardware and software, we’ve definitely been pushing the limits of what the device can handle, and we’re learning a little bit more every day.

Stopgap

As a stopgap, I released a free command-line macOS version of my encoding tool that I just call spatial. There’s more on the download page and in the documentation, but it can take flat, side-by-side or top/bottom video (or two separate video files) and encode them into a single MV-HEVC file while setting the appropriate metadata values for playback. It can also perform the reverse process and export the same flat video files from a source MV-HEVC file. Finally, it has some metadata inspection and editing commands. I’ve been very busy addressing user feedback, fixing bugs, and adding new features. It’s fun to see all of the excitement around this format!

As I’ve interacted with users of my tool (and others), there’s been general frustration, confusion, and surprise around how to play these new video files on the Vision Pro. Developers are struggling to find the right documentation, understand the new format, and figure out how to play these videos in the headset. We’ve been waiting on third-party players as they also experiment and update their App Store offerings.

To address the playback issue, I’ve released an example spatial player project on GitHub that demonstrates how to read the new spatial metadata, generate the correct projection geometry, and play back in stereo or mono. It’s my hope that this is just enough to unblock any developers who are trying to make a video playback app. I also hope that creators can finally see what their immersive content can look like in the Vision Pro! I’ve been sent some stunning footage, and I’m always excited to experience new content.

Perhaps this is enough to hold us over until WWDC24, where I hope that Apple shares a lot more about their plans for third-party immersive media.

Documentation

At this point, I’ve spent a lot of time digging into this new format, and along the way, I’ve discovered information that isn’t captured or explained in the documentation. So while it’s not my intention to write official documentation, it is important to share these details so that others can make progress. Just keep in mind that these undocumented values may change, and some of them are based on my interpretation.

In the 0.9 (beta) document that describes Apple HEVC Stereo Video from June 21, 2023, Apple describes the general format of their new “Video Extended Usage” box, called vexu. Unfortunately, this document is out-of-date and doesn’t reflect the format of the vexu box in video that is captured by iPhone 15 Pro or Vision Pro. It is still worth reading for background and context, though, and I hope that we’ll see an updated version soon.

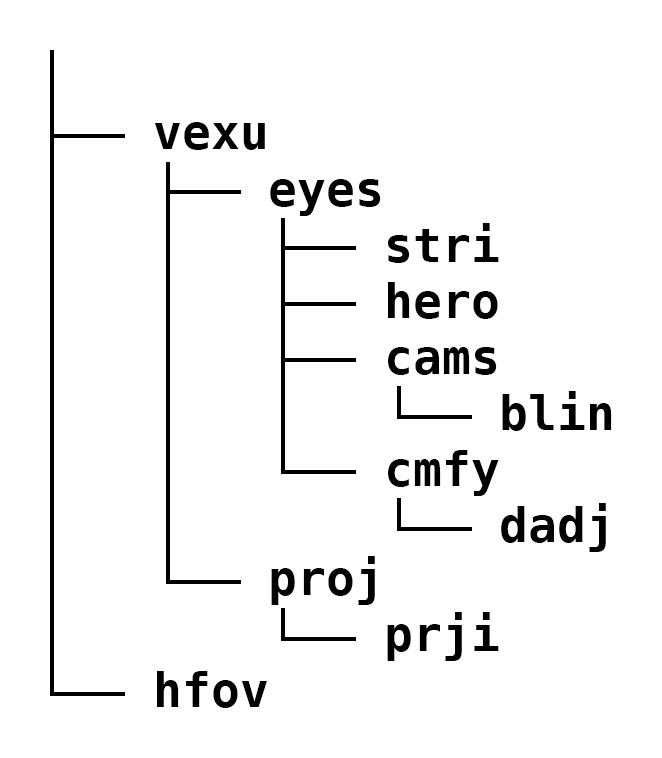

Below is the actual structure of the metadata related to spatial and immersive video, and it is found in the video track under the visual sample entries. If you want to investigate this metadata, I’d direct you to my spatial tool or something like MP4Box. Normally you’d want to use AVFoundation constants and enums to set and read these values, but for those who want to know more:

Most of these boxes are optional, and they’ll only appear if they’re used. Here’s what I know (with some guesses about what the names might mean):

- vexu – top-level container for everything except hfov
  - eyes – eye-related container only
    - stri – “stereo view information,” contains four boolean values:
      - eyeViewsReversed – the order of the stereo left eye and stereo right eye is reversed from the default order (left first, right second)
      - hasAdditionalViews – one or more additional views may be present beyond the stereo left and stereo right eyes
      - hasLeftEyeView – the stereo left eye is present in video frames (kVTCompressionPropertyKey_HasLeftStereoEyeView)
      - hasRightEyeView – the stereo right eye is present in video frames (kVTCompressionPropertyKey_HasRightStereoEyeView)
    - hero – contains a 32-bit integer that defines the hero eye:
      - 0 = none
      - 1 = left
      - 2 = right
  - cams – perhaps “cameras,” container only
    - blin – perhaps “baseline,” contains a 32-bit integer (in micrometers) that specifies the distance between the camera lens centers
  - cmfy – perhaps “comfy,” container only
    - dadj – perhaps “disparity adjustment,” contains a 32-bit integer that represents the horizontal disparity adjustment over the range of -10000 to 10000 (that maps to a range of -1.0 to 1.0)
  - proj – perhaps “projection,” container only
    - prji – perhaps “projection information,” contains a FourCC that represents the projection format:
      - rect = rectangular (standard spatial video)
      - equi = equirectangular (presumably 360-degree immersive video)
      - hequ = half-equirectangular (presumably ~180-degree immersive video)
      - fish = fisheye (mapping unclear; immersive video; the format of Apple’s Immersive videos in Apple TV)
- hfov – contains a 32-bit integer that represents the horizontal field of view in thousandths of a degree
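If you’d rather poke at the raw boxes yourself, the nesting above can be walked with a few lines of Python. This is a hedged sketch with several assumptions:

- You’ve already located the child boxes of the visual sample entry, for example with MP4Box or your own sample description parser.
- Boxes use ordinary 32-bit sizes.
- The helper names are hypothetical.

The last_int32 decoder is also an assumption. It reads the final 32 bits of a leaf box as a big-endian signed integer, which matches the 32-bit values described above and skips any version/flags header that might precede them. stri and prji are not decoded here.

```python
import struct

# Container boxes from the list above; every other box is treated as a leaf.
CONTAINERS = {b"vexu", b"eyes", b"cams", b"cmfy", b"proj"}


def walk_boxes(data: bytes, depth: int = 0):
    """Yield (depth, fourcc, payload) for each box in `data`, recursing into
    known containers. Each box starts with a 32-bit big-endian size (which
    includes the 8-byte header) followed by a four-character type code.
    64-bit sizes (size == 1) and to-end sizes (size == 0) are rejected."""
    offset = 0
    while offset + 8 <= len(data):
        size, fourcc = struct.unpack_from(">I4s", data, offset)
        if size < 8 or offset + size > len(data):
            raise ValueError(f"unsupported or bad box size {size} at offset {offset}")
        payload = data[offset + 8 : offset + size]
        yield depth, fourcc.decode("ascii"), payload
        if fourcc in CONTAINERS:
            yield from walk_boxes(payload, depth + 1)
        offset += size


def last_int32(payload: bytes) -> int:
    """ASSUMPTION: read the final 32 bits of a leaf payload as a big-endian
    signed integer (skipping any version/flags header that may precede it)."""
    return struct.unpack(">i", payload[-4:])[0]


def box(fourcc: bytes, payload: bytes) -> bytes:
    """Hypothetical helper: build a plain box for experimenting."""
    return struct.pack(">I4s", 8 + len(payload), fourcc) + payload


# Synthetic example: a 63.76 mm baseline and a 71.59-degree hfov.
sample = (box(b"vexu", box(b"cams", box(b"blin", struct.pack(">i", 63760))))
          + box(b"hfov", struct.pack(">i", 71590)))
for depth, fourcc, payload in walk_boxes(sample):
    value = last_int32(payload) if fourcc in ("blin", "dadj", "hfov") else ""
    print("  " * depth + fourcc, value)
```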

In AVFoundation, these values are found in the extensions for the format description of the video track.

While the projection values are defined as CMProjectionTypes, the key that defines the projection in the media extensions appears to be undocumented (at least for now). Between you and me, the key is “ProjectionKind”.

Also, to have media labeled as “spatial” (and to play back as such) in the Files and Photos apps on Vision Pro, the horizontal field of view (--hfov in my spatial tool) needs to be set along with either the camera baseline (--cdist in my tool) or the horizontal disparity adjustment (--hadjust in my tool).

If you want the aspect ratio of your spatial videos to be respected during playback in Files and Photos, you should set the projection to rectangular (--projection rect in my tool). Otherwise, your video might play back in a square-ish format.
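The two rules above are easy to check before you hand a file to someone. This small sketch assumes the metadata has already been read into a dictionary keyed by box name, which is a hypothetical representation and not an AVFoundation type. Keep in mind that these rules come from my observations of the Files and Photos apps, not from documentation, so they may change.

```python
def spatial_metadata_warnings(meta: dict) -> list[str]:
    """Check box values (keyed by fourcc) against the observed Files/Photos
    rules. These rules are observations, not documented requirements."""
    warnings = []
    if "hfov" not in meta:
        warnings.append("missing hfov: won't be labeled spatial")
    if "blin" not in meta and "dadj" not in meta:
        warnings.append("missing both blin and dadj: won't be labeled spatial")
    if meta.get("prji") != "rect":
        warnings.append("prji is not 'rect': aspect ratio may not be respected")
    return warnings


# Complete rectangular spatial metadata passes every check.
print(spatial_metadata_warnings({"hfov": 63400, "dadj": 200, "prji": "rect"}))  # []
```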

The horizontal field of view value seems to be used during playback in the Files and Photos apps to size the virtual screen so that it takes up the specified number of degrees. I’m sure there are lower and upper limits, and someone will figure them out.
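For a sense of scale, consider a flat screen centered in front of you at distance d. It spans hfov degrees when its width is w = 2 · d · tan(hfov / 2). The sketch below applies that geometry. The distance is a free parameter because I don’t know where Files and Photos actually place the virtual screen, and the function names are hypothetical.

```python
import math


def hfov_from_raw(raw: int) -> float:
    """hfov is stored in thousandths of a degree."""
    return raw / 1000.0


def screen_width(hfov_deg: float, distance: float) -> float:
    """Width of a flat screen, centered in front of the viewer at `distance`,
    that spans `hfov_deg` horizontally (same unit as `distance`)."""
    return 2.0 * distance * math.tan(math.radians(hfov_deg) / 2.0)


# An hfov of 63400 (63.4 degrees) at an assumed 2 m viewing distance:
print(f"{screen_width(hfov_from_raw(63400), 2.0):.2f} m")
```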

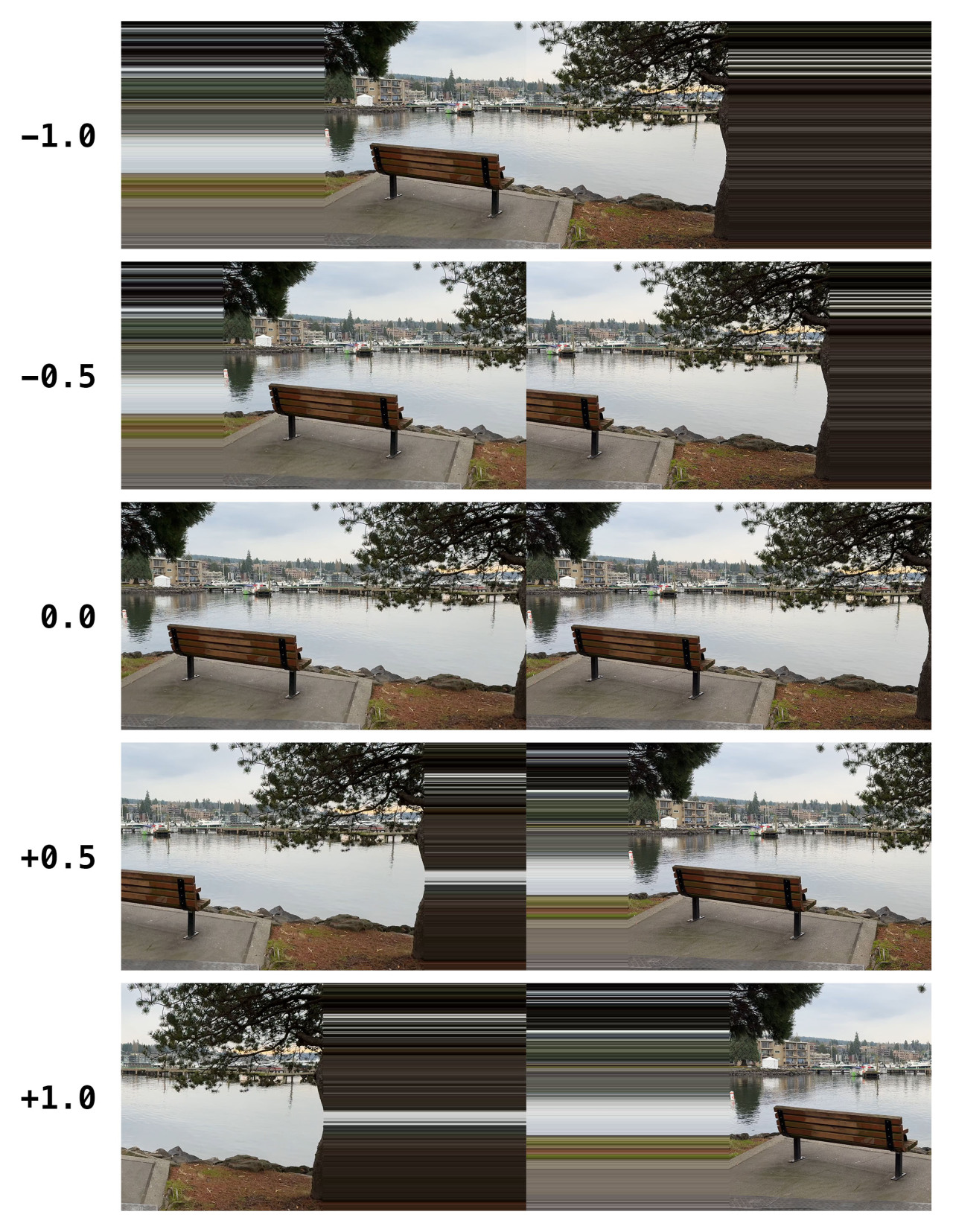

This graphic illustrates the effect of setting different horizontal disparity adjustment values for stereo rectilinear playback on Apple Vision Pro (while I’ve simulated this graphic, the pixel smearing looks like this during playback). As expected, positive values increase the separation between the two eyes, making the depth appear more pronounced. Obviously, these are extreme test values to illustrate the effect; normal values are typically very small.

Here are the metadata values for the Apple Vision Pro and iPhone 15 Pro for reference:

Apple Vision Pro

- Camera baseline: 63.76mm

- Horizontal field of view: 71.59 degrees

- Horizontal disparity adjustment: +0.0293

- Projection: rectangular

iPhone 15 Pro

- Camera baseline: 19.24mm

- Horizontal field of view: 63.4 degrees

- Horizontal disparity adjustment: +0.02

- Projection: rectangular
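These human-readable values map onto the raw integers described earlier: micrometers for the baseline, thousandths of a degree for hfov, and -10000 to 10000 for the disparity adjustment. Here’s a small conversion sketch. The function name is hypothetical, and it rounds to the nearest integer because values like 63.76 aren’t exact in binary floating point.

```python
def to_raw(baseline_mm=None, hfov_deg=None, disparity=None) -> dict:
    """Convert readable spatial metadata into the raw box integers."""
    raw = {}
    if baseline_mm is not None:
        raw["blin"] = round(baseline_mm * 1000)  # millimeters -> micrometers
    if hfov_deg is not None:
        raw["hfov"] = round(hfov_deg * 1000)     # degrees -> thousandths of a degree
    if disparity is not None:
        if not -1.0 <= disparity <= 1.0:
            raise ValueError("disparity adjustment must be within [-1.0, 1.0]")
        raw["dadj"] = round(disparity * 10000)   # [-1.0, 1.0] -> [-10000, 10000]
    return raw


print(to_raw(63.76, 71.59, 0.0293))  # Apple Vision Pro
print(to_raw(19.24, 63.4, 0.02))     # iPhone 15 Pro
```

For the Vision Pro, that works out to a blin of 63760, an hfov of 71590, and a dadj of 293.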

Limitations

I receive multiple messages and files every day from people who are trying to find the limits of what the Apple Vision Pro is capable of playing. You can start with the 4320×4320 per-eye 90fps content that Apple is producing and go up from there. I’ve personally played up to “12K” (11520×5760) per-eye, 360-degree stereo video at 30fps. For what it’s worth, that is an insane amount of data throughput and speaks to the power of the Vision Pro hardware!
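To put “insane” in numbers, here’s a rough count of decoded pixels per second for both eyes. It ignores bitrate, bit depth, and everything else the decoder does, so treat it as an order-of-magnitude comparison only. The 12K example moves about 19% more pixels per second than Apple’s own 90fps content.

```python
def pixel_rate(width: int, height: int, fps: float, views: int = 2) -> float:
    """Decoded pixels per second across all views."""
    return width * height * views * fps


apple = pixel_rate(4320, 4320, 90)
twelve_k = pixel_rate(11520, 5760, 30)
print(f"Apple Immersive: {apple / 1e9:.2f} Gpx/s")    # Apple Immersive: 3.36 Gpx/s
print(f"12K 360 stereo:  {twelve_k / 1e9:.2f} Gpx/s")  # 12K 360 stereo:  3.98 Gpx/s
```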

I’ve had my Vision Pro crash and reset itself many times while attempting to play back some of these extra-large videos. I’ve even had it crash and reset with more reasonable (but still large) videos, so clearly there are some issues to fix.

On the encoding side, it takes a lot of horsepower to encode frames that are this large. Video encoding works by comparing frames over time and storing movements and differences between pixels to save bandwidth. To do this, a video encoder not only has to retain multiple frames in memory, but it has to perform comparisons between them. With frames that can easily be 100MB or more in size, a fast machine with lots of memory is your best friend. Even my M1 Ultra Mac Studio is brought to a crawl when encoding the aforementioned 12K content.
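The “100MB or more” figure falls out of simple arithmetic on uncompressed frames. This sketch assumes planar YUV. With 4:2:0 chroma subsampling, that is 1.5 samples per pixel, and samples deeper than 8 bits are assumed to be held in 16-bit containers. Real encoders use their own internal layouts, so treat the results as estimates. An encoder also keeps several of these frames around as references at once.

```python
def raw_frame_bytes(width: int, height: int, bit_depth: int = 8,
                    chroma: str = "420", views: int = 1) -> int:
    """Approximate uncompressed size of one frame in planar YUV."""
    # Luma plus chroma samples per pixel for common subsampling schemes.
    samples_per_pixel = {"420": 1.5, "422": 2.0, "444": 3.0}[chroma]
    # Assumption: samples deeper than 8 bits are held in 16-bit containers.
    bytes_per_sample = 1 if bit_depth <= 8 else 2
    return int(width * height * samples_per_pixel * bytes_per_sample * views)


one_eye_8bit = raw_frame_bytes(11520, 5760)
stereo_10bit = raw_frame_bytes(11520, 5760, bit_depth=10, views=2)
print(f"{one_eye_8bit / 1e6:.1f} MB, {stereo_10bit / 1e6:.1f} MB")  # 99.5 MB, 398.1 MB
```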

Conclusion

I hope that this tour through what I’ve learned about Apple Vision Pro’s support for spatial and immersive media has been insightful. And I hope that my spatial command-line tool and related video player example on GitHub are useful to interested developers and content creators.

As always, if you have questions, comments, or feedback…or if you just want to share some interesting spatial media files, I’d love to hear from you.
