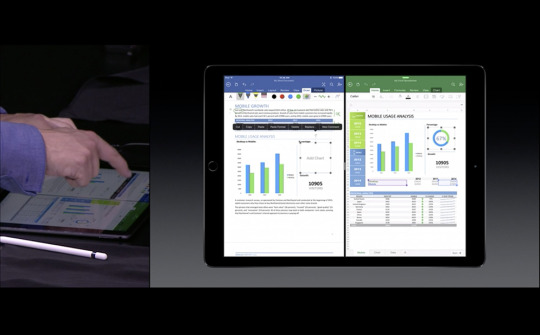

If you watched Apple’s September 9th Special Event when they introduced the upcoming iPad Pro, you may remember the segment around 35:05 where Microsoft demonstrated Excel and Word side-by-side using the new iOS 9 Split View feature.

“So having these two applications side-by-side is a huge productivity boost…and allows us to do things like copy and paste this chart that you see and put it into Microsoft Word.”

I don’t know about you, but I was excited, thinking I was about to see the first reveal of drag-and-drop across Split Views on an iOS device! But then, like the destruction of Alderaan, I heard the collective gasp of iOS developers everywhere, as if millions of voices suddenly cried out in terror and were suddenly silenced, while we watched one of the presenters simply copy the chart from Excel and paste it into Word.

Fast-forward to this past Thursday, when I was eating lunch with a friend. We were talking about this segment of the presentation, and I started to wonder if there would be any way to pull off the drag-and-drop that we all expected. It was time for some experimentation.

The first question I needed to answer was whether a touch that begins in one window continues to be tracked across the Split View. If you’ve ever worked with touch events in UIKit, you’ve probably noticed that a touch stays associated with the view in which it began, even if your finger is dragged outside of that view’s bounds. But surely the touch would be cancelled if your finger crossed the Split View into another running process, right? After a few minutes in Xcode, it was easy to demonstrate that, yes, touch events continue to be delivered to the initial view, even when your finger is dragged across the splitter.

Because there is no way to “hand off” a touch event to another app, I knew that the rest of the gesture would rely on communication between the two running processes. I decided to use system-wide Darwin notifications, and in particular, the fantastic MMWormhole project on GitHub. MMWormhole provides a convenient wrapper for Darwin notifications while also making it easy to pass data back and forth between the two processes. Unfortunately, system-wide Darwin notifications can’t carry userInfo-style data payloads, so the data has to be marshalled through some other channel, such as a shared App Group container.
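A Darwin notification can only announce that something happened, so the payload needs its own channel. The sketch below packs a drag message into bytes. One app would write those bytes into the shared container, and the other would read them back when the notification arrives. It assumes a hypothetical fixed-size binary record and is not MMWormhole’s format. It is plain C, so it also compiles as Objective-C.

```c
#include <stdint.h>
#include <string.h>
#include <stddef.h>

/* Hypothetical message kinds for the drag illusion. */
typedef enum { DRAG_BEGIN = 1, DRAG_MOVE = 2, DRAG_END = 3 } DragKind;

/* Hypothetical payload: message kind plus a touch location in points. */
typedef struct {
    uint32_t kind;
    double x;
    double y;
} DragMessage;

/* Size of the packed record: no struct padding is written out. */
enum { DRAG_MESSAGE_SIZE = sizeof(uint32_t) + 2 * sizeof(double) };

/* Pack field by field into `out`; returns the number of bytes written. */
size_t drag_message_encode(const DragMessage *msg, unsigned char out[DRAG_MESSAGE_SIZE]) {
    memcpy(out, &msg->kind, sizeof msg->kind);
    memcpy(out + sizeof(uint32_t), &msg->x, sizeof msg->x);
    memcpy(out + sizeof(uint32_t) + sizeof(double), &msg->y, sizeof msg->y);
    return DRAG_MESSAGE_SIZE;
}

/* Unpack a record produced by drag_message_encode. */
void drag_message_decode(const unsigned char in[DRAG_MESSAGE_SIZE], DragMessage *msg) {
    memcpy(&msg->kind, in, sizeof msg->kind);
    memcpy(&msg->x, in + sizeof(uint32_t), sizeof msg->x);
    memcpy(&msg->y, in + sizeof(uint32_t) + sizeof(double), sizeof msg->y);
}
```

Native byte order is fine here because both processes run on the same device. The Darwin notification itself (for example, one posted through `CFNotificationCenterGetDarwinNotifyCenter`) would only signal that a new record is waiting.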

At this point, I had everything I needed for my test. Here is a simple example showing a green UIView being dragged across the splitter.

Of course, in reality, nothing is being dragged across the splitter. The UIView in the left app is being moved out-of-view, and a corresponding UIView in the right app is being positioned based on a Darwin notification and data payload that contains the touch location translated to the coordinate system of the right app. A very useful illusion.
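Once each app knows where its window sits on the screen, the translation is simple arithmetic. You convert the touch from the sender’s window coordinates to screen coordinates, then into the receiver’s window coordinates. The window origins could be measured (see the update at the end) or guessed from known sizes.

The sketch below is plain C and makes several assumptions:

- `DragPoint` and every function name are hypothetical.
- Both windows span the full screen height.
- The right-hand window is flush with the screen’s right edge.
- There is no rotation or scaling between the two coordinate systems.

```c
#include <stdbool.h>

/* Hypothetical stand-in for CGPoint so the sketch compiles as plain C. */
typedef struct { double x; double y; } DragPoint;

/* Heuristic origin for a Split View window: assumes full-height windows
   and a right-hand window that is flush with the screen's right edge. */
DragPoint guess_window_origin(double screenWidth, double windowWidth, bool isLeft) {
    DragPoint origin = { 0.0, 0.0 };
    if (!isLeft && windowWidth < screenWidth) {
        origin.x = screenWidth - windowWidth;
    }
    return origin;
}

/* Window coordinates -> screen coordinates for a window at `origin`. */
DragPoint window_to_screen(DragPoint p, DragPoint origin) {
    DragPoint s = { p.x + origin.x, p.y + origin.y };
    return s;
}

/* Screen coordinates -> window coordinates for a window at `origin`. */
DragPoint screen_to_window(DragPoint p, DragPoint origin) {
    DragPoint w = { p.x - origin.x, p.y - origin.y };
    return w;
}

/* Translate a touch from the sending app's window into the receiver's window. */
DragPoint translate_touch(DragPoint touch, DragPoint senderOrigin, DragPoint receiverOrigin) {
    return screen_to_window(window_to_screen(touch, senderOrigin), receiverOrigin);
}
```

Take made-up sizes: a 1024-point-wide screen and a 320-point right-hand window. The right app’s origin is then x = 704, so a touch reported at (800, 300) by the left app maps to (96, 300) in the right app.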

This is where I stopped, only because I have no use for this feature in my current apps. However, my experiment has shown that with a bit more work, this can be turned into a very useful project. Here are some thoughts and considerations that will need to be addressed:

- How do the collaborating apps determine the offset of their respective coordinate systems? My test assumed one app running on the left and one running on the right. I’m not aware of a method to determine actual window coordinates relative to screen coordinates, but if that exists, this is a simple problem to solve (update below). If not, you can imagine some heuristics to get you most, if not all, of the way there (e.g. a limited set of Split View sizes, a known screen size, and known window sizes).

- What is the protocol for drag-and-drop handoff? A simple protocol might assume 1) a beginDragWithThumbnail-style event, followed by 2) touch tracking, ending with 3) an endDragWithData-style event to pass the shared data. Another method could employ the system-wide UIPasteboard to handle the copy-and-paste functionality, leaving the protocol to handle the illusion.

- Can this work outside of an App Group? My test worked because I am a single developer who is able to set up a shared data container between the two running apps. Any developer (including Microsoft) can use the same technique to make this work between their own apps. But I wonder if there is any reasonable way to pull this off across apps that don’t share an App Group. The “live” nature of the touch events would make this very difficult for a web service, for example.
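The three-step handoff from the second consideration could look something like this on the receiving side. This is a minimal sketch in plain C, and all message and type names are hypothetical. It only tracks the state of the stand-in view. What “delivering the data” means at the end is left open: a payload, or a signal to read the UIPasteboard.

```c
#include <stdbool.h>

/* Hypothetical message kinds: begin (with thumbnail), move, end (with data). */
typedef enum { MSG_BEGIN_DRAG, MSG_MOVE, MSG_END_DRAG } MessageKind;

typedef enum { RECEIVER_IDLE, RECEIVER_TRACKING } ReceiverState;

typedef struct {
    ReceiverState state;
    double x, y;          /* last position of the stand-in view */
    bool thumbnailShown;  /* whether the stand-in view is visible */
    bool dropDelivered;   /* set when the end message completes a drag */
} Receiver;

/* Apply one message in order; returns false if it arrives out of sequence. */
bool receiver_handle(Receiver *r, MessageKind kind, double x, double y) {
    switch (kind) {
    case MSG_BEGIN_DRAG:
        if (r->state != RECEIVER_IDLE) return false;
        r->state = RECEIVER_TRACKING;
        r->thumbnailShown = true;
        r->dropDelivered = false;
        break;
    case MSG_MOVE:
        if (r->state != RECEIVER_TRACKING) return false;
        break;
    case MSG_END_DRAG:
        if (r->state != RECEIVER_TRACKING) return false;
        r->state = RECEIVER_IDLE;
        r->thumbnailShown = false;
        r->dropDelivered = true;
        break;
    }
    /* Every accepted message carries the latest translated touch location. */
    r->x = x;
    r->y = y;
    return true;
}
```

Rejecting out-of-order messages is one design choice among several. It keeps a stray move or end message, say from a notification that arrives late, from showing a thumbnail that was never started.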

If anyone does turn this into a reusable project, please let me know. Otherwise, it’s my hope that functionality like this is on Apple’s near-term radar. It’s something that’s obviously needed, especially with Split View.

Have fun!

Update: shortly after posting, I realized that you can use the UICoordinateSpace methods on UIScreen to determine actual coordinates for each participating app:

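```objc
#import <UIKit/UIKit.h>

// Returns this app's window frame in screen coordinates. In Split View,
// the origin tells each app where its window sits on the screen.
static CGRect ActualWindowFrame(void) {
    UIWindow *keyWindow = [UIApplication sharedApplication].keyWindow;
    return [[UIScreen mainScreen].coordinateSpace convertRect:keyWindow.bounds
                                          fromCoordinateSpace:keyWindow];
}
```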
